The Key Reasons for Indexing Issues in SEO

The Importance of Proper Indexation for Your Website

Proper indexation is a key health metric for every website. It increases the legitimacy and authority of your site and lets you acquire new customers for your business. Improper indexation, on the other hand, makes customer acquisition harder. If you have unaddressed indexing issues on your site, you will lose out to competitors who have already fixed those problems.

In other words, it’s impossible to attract visitors to your site and establish a steady flow of organic traffic without proper indexation. Not to mention, you can’t generate revenue if your website isn’t visible in search.

Even if you follow all the ‘rules,’ there are no guarantees that your content will be shown in search results. Ultimately, Google decides what should and should not be indexed. But to increase the chances of proper indexation, you should always:

- ensure your website has a clear structure

- use smart interlinking and avoid orphan pages

- keep your XML sitemap up to date

- use manual or automated solutions to speed up indexing

- publish and maintain high-quality content

- run regular tech SEO audits

Key Reasons for Indexation Issues

Identifying the underlying cause of an indexing issue can be tricky. So let’s explore why these issues occur, starting with content-related problems.

Content-Related Issues

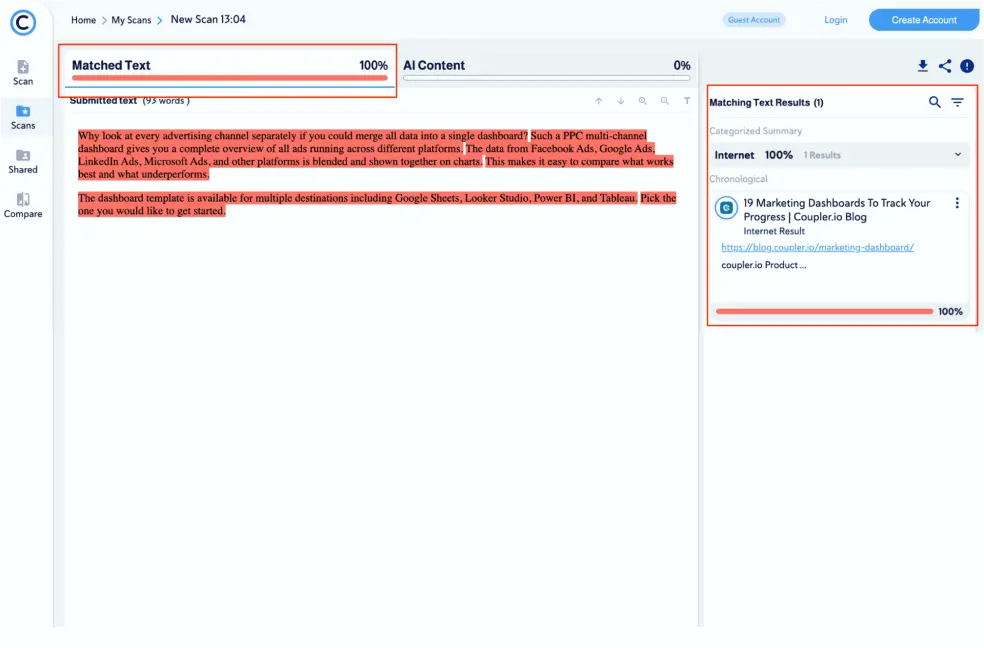

Duplicate or very similar content

Google has always taken a hard stance on duplicate content. So, if your content is a close or identical match to other material on the web, the bot may decide to disregard it. The same goes for spun or synonymized content. If your article shows the symptoms of a bad rewrite – unusual word choice, awkward phrasing – the crawler may refuse to index it.

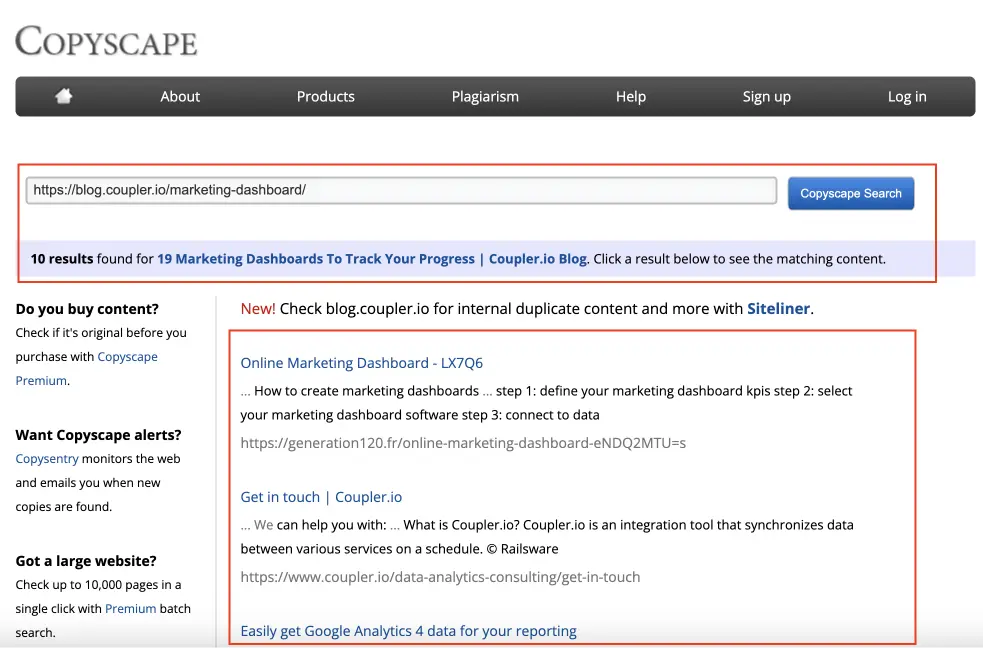

Content that offers fresh perspectives on a popular topic has a higher chance of being indexed. But to rule out blatant duplication in your content, use plagiarism checker tools like Copyleaks, Serpstat Plagiarism Checker, or Copyscape.
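As a rough illustration of what a “close match” can look like in practice, the sketch below compares two texts by their overlapping three-word sequences (word shingles) and reports their Jaccard similarity. It is a toy under stated assumptions, not how Google or the tools above detect duplication; the function names and the shingle size are hypothetical choices.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word n-grams ("shingles") in a text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        # Very short texts: treat the whole text as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    union = sa | sb
    # Two empty texts give us nothing to compare, so report 0.0.
    return len(sa & sb) / len(union) if union else 0.0
```

A score near 1.0 suggests the two texts are nearly identical, while a score near 0.0 suggests they share almost no phrasing. Where to draw the line is a judgment call for your own content.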

Low-quality content

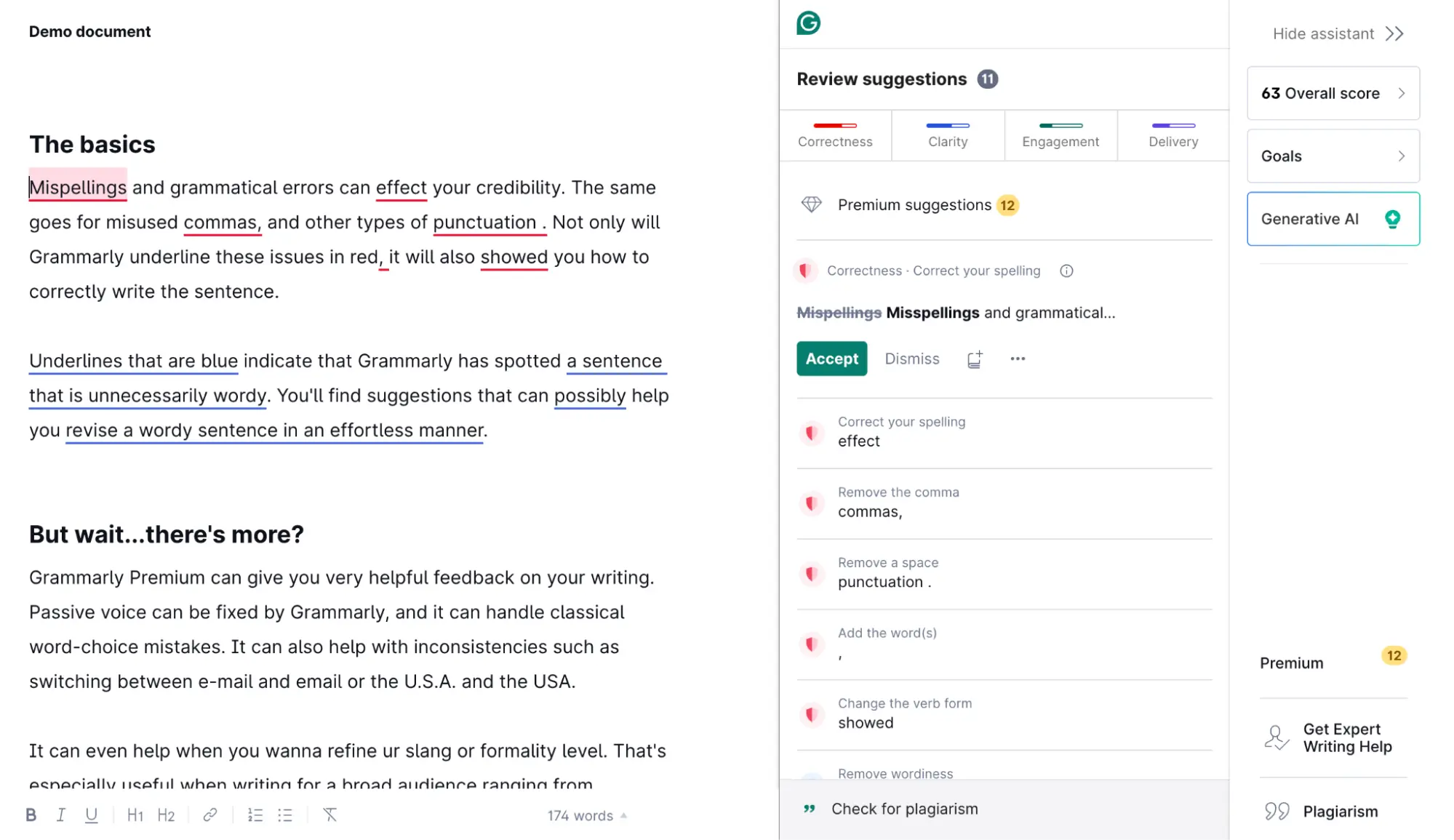

Indexing issues can be a sign of low-quality content. If your pages are riddled with grammar mistakes or your blog posts lack structure, Googlebot won’t see the point in indexing them.

But readability isn’t the only factor. For content to be considered high-quality, it must also provide value to the reader.

So, does your content answer the reader’s main question? Is it repetitive or boring? Is it fluffy or unfocused? Has it been written for machines instead of humans? If so, this might be why your content piece isn’t being indexed, and you could benefit from using a tool to assess content quality.

Grammarly is a great option. Apart from helping you eliminate grammar mistakes, it will make your copy clearer and more concise.

Technical Issues

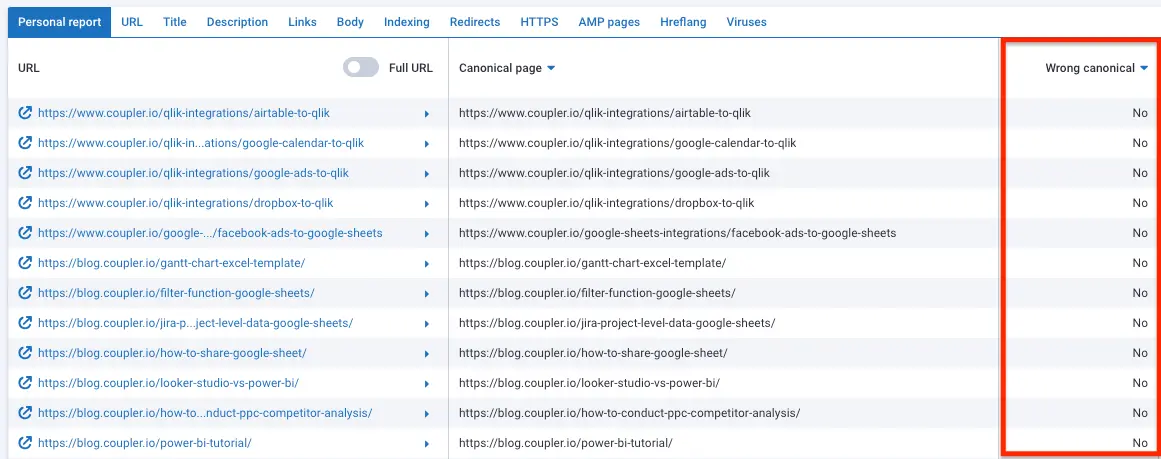

Wrong canonicals

A wrong or empty canonical tag on a page can lead to significant problems and may be the reason for your indexing issue. The good news? It’s possible to validate canonicals with Serpstat’s site audit feature:

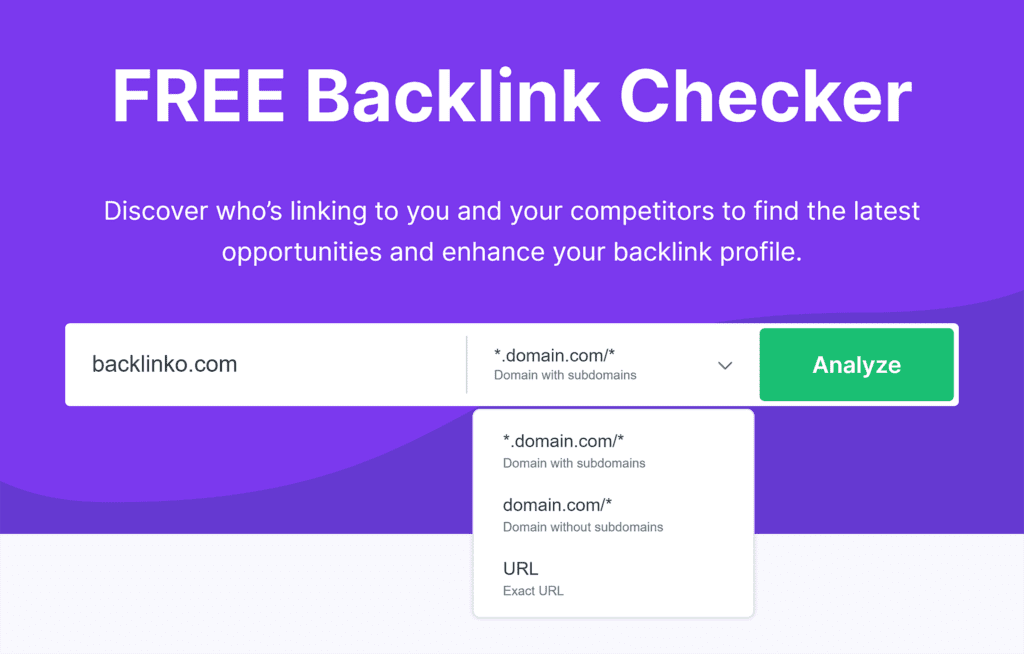
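For a quick manual spot check of a single page, a minimal sketch along these lines can classify its canonical tag. It assumes you already have the page’s HTML as a string; `CanonicalParser`, `check_canonical`, and the returned labels are hypothetical names, not part of any audit tool.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonicals.append(attr.get("href"))


def check_canonical(html: str, page_url: str) -> str:
    """Classify the canonical tag of a page (labels are illustrative)."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing"
    if len(parser.canonicals) > 1:
        return "multiple"
    href = (parser.canonicals[0] or "").strip()
    if not href:
        return "empty"
    # Resolve relative hrefs against the page URL before comparing.
    target = urljoin(page_url, href)
    if target.rstrip("/") != page_url.rstrip("/"):
        return "points elsewhere"
    return "self-referencing"
```

A result of “points elsewhere” is not automatically an error, since a canonical may deliberately point to a preferred version of the page. It only flags the page for a manual look.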

Orphaned pages

Pages with no internal links pointing to them are another common problem. Ideally, your site structure should resemble a tree, where each page receives several internal links. For instance, a typical pricing page would contain internal links to your ‘sign up’ and ‘contact us’ pages, among many others. This interconnectedness makes pages more visible to crawlers and improves their chances of being indexed.

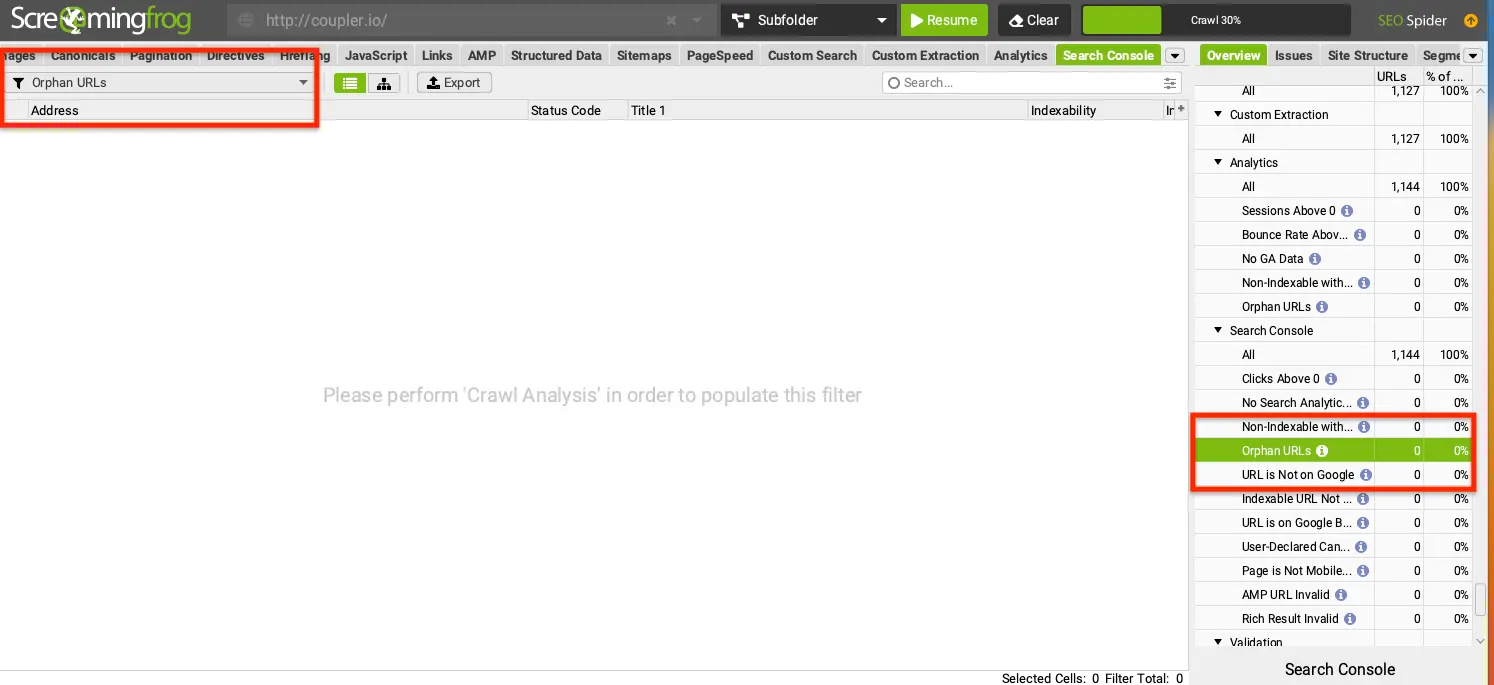

To quickly identify orphaned pages, use Screaming Frog SEO Spider. It lets you crawl the whole domain and spot the orphans.
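The underlying idea can be sketched in a few lines, assuming you already have a map of every known URL to the internal links it contains (for example, built from your sitemap plus a crawl export). The `find_orphans` function and the sample `site` data are hypothetical.

```python
def find_orphans(links: dict, home: str = "/") -> set:
    """Return known pages that no other page links to (homepage exempt)."""
    linked_to = set()
    for source, targets in links.items():
        # A page linking to itself does not make it reachable from elsewhere.
        linked_to.update(t for t in targets if t != source)
    return {page for page in links if page not in linked_to and page != home}


# Each key is a known page; each value is the set of internal links on it.
site = {
    "/": {"/pricing", "/blog"},
    "/pricing": {"/signup", "/contact"},
    "/blog": {"/"},
    "/signup": set(),
    "/contact": set(),
    "/old-landing": {"/pricing"},  # links out, but nothing links in
}

print(find_orphans(site))  # {'/old-landing'}
```

Note that the list of known pages has to come from somewhere other than following links, because a link-following crawl alone never reaches an orphan.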

Soft 404s

Another reason for indexing issues is the presence of soft 404s on your site. This can happen when a custom 404 page doesn’t return the correct response code (404 or 410) to Googlebot, or when a page contains so little content that the crawler treats it as a soft 404. The problem with such pages is not that they drop out of the index but that they remain crawlable and may be indexed, so crawlers waste effort on pages that users don’t need to see.

Check your Google Search Console (GSC) reports for URLs returning soft 404 errors. Depending on your specific case, you may need to change server configurations, set up a redirect, or add new content to the page.
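To pre-screen pages yourself, a simple heuristic can flag likely candidates from a response’s status code and visible text. The sketch below is only an approximation under assumed thresholds; the phrase list, the `MIN_WORDS` value, and the function name are hypothetical, and Google’s own classification is not public.

```python
import re

# Assumed phrases that typically appear on "not found" pages.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "nothing found")
# Assumed cut-off for "minimal content"; tune it for your own site.
MIN_WORDS = 50


def looks_like_soft_404(status_code: int, body_text: str) -> bool:
    """Flag pages that answer 200 OK but read like an error or empty page."""
    if status_code != 200:
        # A real 404/410 or a redirect is, by definition, not a soft 404.
        return False
    text = body_text.lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    return len(re.findall(r"\w+", text)) < MIN_WORDS
```

Pages flagged this way still need a manual review, since a short page can be perfectly legitimate.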

Crawl budget limits

Googlebot spends a limited amount of resources crawling each website. If a big chunk of that budget goes to a handful of pages, there won’t be enough capacity left for the crawler to reach other content on your site. So, make sure you have a proper website structure with smart interlinking, decide which content needs to be crawled, and keep search bots away from content that doesn’t.

Crawl budget can be wasted for many reasons, and the topic is broad enough for a separate article. Try using a website crawler tool like JetOctopus or Netpeak Spider to perform a full log analysis. It may provide you with some insights.
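To get a first impression before reaching for a dedicated tool, you can count which URLs Googlebot requests most often in your server’s access log. This minimal sketch assumes the common “combined” log format and matches the user-agent string only (which can be spoofed); `googlebot_hits` and the regular expression are illustrative, not taken from any of the tools mentioned.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer, and user-agent fields
# of a combined-log-format line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(log_lines) -> Counter:
    """Count requests per URL whose user agent claims to be Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits
```

Calling `googlebot_hits(open("access.log"))` (the file name is an assumption) and then `.most_common(20)` on the result lists the URLs that receive the most crawler attention, which is a reasonable starting point for spotting wasted budget.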

URL blocked by robots.txt or with a ‘noindex’ tag on the page

Maybe the crawler isn’t indexing your content because the page is telling Googlebot to ‘skip’ it. This could be due to a robots.txt rule that tells Google not to crawl the URL, or an unintended ‘noindex’ tag. These are usually accidents or leftover safeguards from when your site was in development.

You can quickly check for these blockers manually by inspecting the robots.txt file or the page source code. If you have projects with a large number of pages, Serpstat can save you time.
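The manual check can also be scripted with Python’s standard library. The sketch below assumes you have already downloaded the robots.txt file and the page HTML as strings; `blocked_by_robots`, `RobotsMetaParser`, and `has_noindex` are hypothetical helper names. Two caveats: `urllib.robotparser` does not replicate every detail of Google’s rule matching (such as wildcards), and a ‘noindex’ sent via the `X-Robots-Tag` HTTP header is not covered here.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def blocked_by_robots(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the robots.txt rules disallow this URL for the agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


class RobotsMetaParser(HTMLParser):
    """Detect <meta name="robots" content="...noindex..."> in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        content = (attr.get("content") or "").lower()
        if name in ("robots", "googlebot") and "noindex" in content:
            self.noindex = True


def has_noindex(html: str) -> bool:
    """Return True if the page carries a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex
```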

Here’s an example from Serpstat’s indexing overview page: