10 Ways to Accelerate Web Page Indexing and Its SEO Effects

A website’s online visibility depends significantly on search engines. If your website isn’t getting indexed, or indexing takes too long, your competitors can easily outperform you and expand their market share. Fast web page indexing is, therefore, a no-brainer.

You might be reading this article because you’re having problems getting your new pages indexed by Google, but it’s just as essential to understand how fast Google picks up your updates and optimizations. This is even more important for enterprise websites, where content is likely to change regularly.

Either way, you want Googlebot to crawl, index, and rank your pages as fast as possible. This will allow you to see the results of your marketing efforts sooner and gain an edge over your competitors.

So, in this guide, we’ll teach you 10 strategies to speed up your web page indexing process.

Effects of Slow Web Page Indexing on SEO and Business

Before we dive into how to accelerate your web page indexing speed, let’s discuss why low-speed indexing matters for your SEO rankings.

For a page to show up on Google SERPs, it needs to be crawled and indexed. If your pages aren’t indexed, users can’t find you and your hard work goes to waste. The same applies when Google only partially indexes your content: it can’t read everything you’ve optimized (a problem also known as missing content), which keeps you from reaching the top of the SERPs. And when Google indexes your pages slowly, ranking is delayed, putting you at a competitive disadvantage in search results.

For businesses that rely on online visibility, poor SEO performance means losing visibility, traffic, and sales. Furthermore, web page indexing issues don’t only affect large websites (such as Walmart, where 45% of product pages aren’t indexed) but small websites too.

That’s why it’s important to ensure your pages get fully indexed, and fast.

1. Eliminate Infinite Crawl Spaces

In the indexing process, Google will first crawl your site to find all URLs and understand the relationship between the pages (site structure). This process is basically Googlebot following each link in your files.

Related: Find out how Google indexing works and why indexing JavaScript content differs from indexing HTML files.

When all pages are internally linked (even if a page is only linked from one source), Google can discover all your URLs and use this information to index your site. However, sometimes these connections can lead to unforeseen problems like infinite crawl spaces. These are sequences of near-infinite links with no content that trap Google’s crawlers in endless loops. A few examples of infinite crawl spaces are:

- Redirect loops – these are redirect chains that don’t have an ultimate target. In this scenario, Page A redirects to Page B > Page B redirects to Page C > and Page C redirects to Page A, and the loop starts all over again.

- Irrelevant paginated URLs returning a 200 status code – sometimes Google infers URL patterns to speed up crawling, but this can also create problems. For example, if a paginated series keeps returning a 200 status code for pages that have no real content, Googlebot will keep crawling these URLs until it starts getting a 404 status code (which could be never).

- Calendar pages – there will always be a next day or month, so if you have a calendar page on your site, you can easily trap Google’s crawlers into crawling all these links, which are practically infinite.

Fixing these issues will free Google to crawl your site without wasting your crawl budget on irrelevant URLs, allowing your pages to be discovered faster and, thus, get indexed more quickly.
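
To make the redirect-loop case concrete, here is a minimal Python sketch that follows a chain of redirects and reports the loop if one exists. The `redirects` mapping and the `find_redirect_loop` helper are hypothetical names for illustration; in practice you would build the mapping from your own crawl data or server configuration.

```python
from typing import Dict, List, Optional


def find_redirect_loop(redirects: Dict[str, str], start: str) -> Optional[List[str]]:
    """Follow redirects from `start`; return the URLs forming a loop, or None."""
    seen: Dict[str, int] = {}  # URL -> position in the chain
    chain: List[str] = []
    url = start
    while url in redirects:
        if url in seen:
            # We came back to a URL already visited: everything from its
            # first occurrence onward is the loop.
            return chain[seen[url]:]
        seen[url] = len(chain)
        chain.append(url)
        url = redirects[url]
    return None  # the chain ended at a URL that does not redirect


# Hypothetical redirect map: /a -> /b -> /c -> /a loops, /old -> /new does not.
redirects = {"/a": "/b", "/b": "/c", "/c": "/a", "/old": "/new"}
loop = find_redirect_loop(redirects, "/a")
no_loop = find_redirect_loop(redirects, "/old")
print(loop, no_loop)
```

Any chain that returns a list instead of `None` has no final target and is worth fixing before Googlebot runs into it.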

2. Disallow Irrelevant (For Search) Pages

Your crawling budget is limited, so the last thing you want is for Google to waste it on pages you don’t want to be shown in search results. Pages like content behind login walls, shopping cart pages, or contact forms have no value for Google and are just consuming your crawl budget for no good reason.

Of course, these are not the only pages you should disallow. Think about what’s essential for search and what’s not, and cut everything that’s taking the attention away from the crucial URLs.

Using the ‘Disallow’ directive in your robots.txt file, you can block crawlers from individual pages or even entire directories.

Here’s a directive example that will block crawlers from accessing any page within the contact directory.
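
As a minimal sketch, the Python snippet below embeds such a rule and checks how a crawler would interpret it using the standard library’s `urllib.robotparser`. The `/contact/` path and the `example.com` URLs are assumptions for illustration, so adjust them to your own site structure.

```python
from urllib.robotparser import RobotFileParser

# Assumed robots.txt content: block every crawler from the /contact/ directory.
robots_txt = """\
User-agent: *
Disallow: /contact/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# can_fetch() answers: may this user agent crawl this URL under these rules?
contact_allowed = parser.can_fetch("Googlebot", "https://example.com/contact/form")
blog_allowed = parser.can_fetch("Googlebot", "https://example.com/blog/post")
print(contact_allowed, blog_allowed)
```

Checking rules this way before deploying them helps you avoid accidentally disallowing pages you do want in search results.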

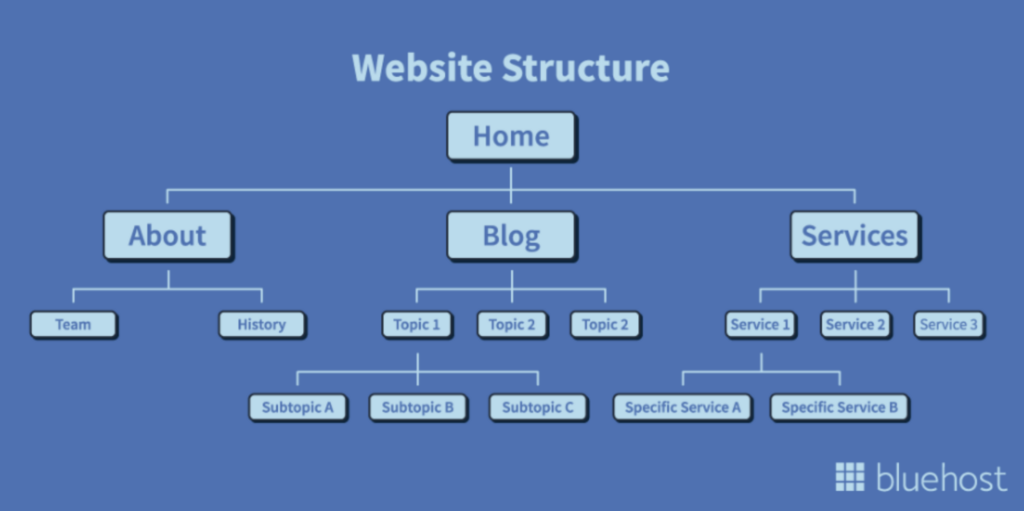

In the example above, you can see how the “about,” “blog,” and “services” pages are closer to the homepage, and everything linked from those sits lower in the structure. This is the picture to keep in mind when building internal links.

To index your pages, Google needs to understand what the page is about and its position in relation to the rest. Building content silos and well-defined categories will help you prevent orphan pages (which are almost impossible for search engines to find) and ease Google’s job of categorizing your pages to add them to its index.

6. Optimize Your Sitemap

Another great place to help your pages get discovered faster is your sitemap. This is a file that acts as a priority list, basically telling Google these are the URLs you want to be more frequently crawled and indexed.

However, when your sitemap has a lot of pages returning error statuses, redirects, or any other kind of problematic URL, you set your site up for failure.

To improve web page indexation times, you want to ensure only the most relevant pages are being added to your sitemap, namely:

- Pages you want Google to crawl and index

- URLs returning 200-code responses

- The canonical version of every page

- Pages that are updated somewhat frequently

A clean sitemap will give your new pages higher visibility and ensure any updates or changes you make to your pages get indexed quickly.
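
The filtering rules above can be sketched in a few lines of Python. This is only an illustration: the `Page` record and its fields are hypothetical and would come from your own crawl data, and the “updated somewhat frequently” criterion is left out because it depends on editorial judgment.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    url: str
    status: int       # HTTP status code seen when crawling the URL
    canonical: str    # canonical URL the page declares
    indexable: bool   # False for pages you don't want crawled and indexed


def belongs_in_sitemap(page: Page) -> bool:
    """Keep only wanted pages that return 200 and are their own canonical."""
    return page.status == 200 and page.indexable and page.canonical == page.url


def build_sitemap(pages: List[Page]) -> str:
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for page in pages:
        if belongs_in_sitemap(page):
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = page.url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical crawl results: only the first page passes every check.
pages = [
    Page("https://example.com/shoes", 200, "https://example.com/shoes", True),
    Page("https://example.com/shoes?color=red", 200, "https://example.com/shoes", True),
    Page("https://example.com/old-shoes", 301, "https://example.com/shoes", True),
    Page("https://example.com/cart", 200, "https://example.com/cart", False),
]
sitemap = build_sitemap(pages)
print(sitemap)
```

Running a check like this on a schedule keeps redirects, duplicates, and unwanted pages from creeping back into the file.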

7. Prerender JavaScript Pages and Dynamic Content

The problem with dynamic pages is that Google can’t access them as fast or as well as it accesses static HTML pages.

As you know by now, Google has a rendering step where it puts together your page to measure different metrics (like page speed and responsiveness) and understand its content before sending it to be indexed.

This process is fairly straightforward for HTML sites because all major blocks of content and links to other pages can already be found on the HTML file.

In the case of dynamic pages, Google has to use a specialized rendering service, based on headless Chromium, to download, execute, and render your JavaScript, which requires more processing power and time.

For single-page applications and large sites using JavaScript to create interactive experiences and functionalities, crawl budget is even scarcer, because these pages in most cases consume 9x more crawl budget than static pages.

All these roadblocks usually result in partially rendered pages, low rankings, and months of waiting for a page to get indexed – if it gets indexed at all.

Remember, if Google can’t render your content, it won’t be indexed.

To solve this bottleneck, Prerender fetches all your files and renders your page on its servers, executing your JavaScript and caching a fully rendered and functional version of your page to serve search engines. This cached version is 100% indexable, ensuring every word, image, and button is visible.
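
To illustrate the general idea, here is a simplified Python sketch of how a server might decide between a cached, pre-rendered snapshot and the regular JavaScript app. It is not Prerender’s actual middleware or API: the `BOT_MARKERS` list, the `respond` function, and the in-memory `cache` are all assumptions made for this example.

```python
from typing import Dict

# Illustrative, incomplete list of crawler user-agent markers (assumption).
BOT_MARKERS = ("googlebot", "bingbot")


def is_crawler(user_agent: str) -> bool:
    """Rough check: does the User-Agent header look like a search crawler?"""
    ua = user_agent.lower()
    return any(marker in ua for marker in BOT_MARKERS)


def respond(path: str, user_agent: str, cache: Dict[str, str], app_shell: str) -> str:
    """Serve the cached rendered HTML to crawlers, the JS app to everyone else."""
    if is_crawler(user_agent) and path in cache:
        return cache[path]
    return app_shell


# Hypothetical data: a rendered snapshot and the empty shell a SPA usually ships.
cache = {"/pricing": "<html><body><h1>Pricing</h1><p>Plans...</p></body></html>"}
app_shell = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'

googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"

bot_html = respond("/pricing", googlebot_ua, cache, app_shell)
user_html = respond("/pricing", browser_ua, cache, app_shell)
print(bot_html == cache["/pricing"], user_html == app_shell)
```

The point of the design is that the crawler receives HTML that already contains the content, while human visitors keep getting the interactive version.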

8. Remove Low-Quality Pages

Now that you have effectively improved your crawl budget and efficiency, it’s time to eliminate the deadweight holding your website back.

Low-quality pages are pages that do not contribute to your website’s success in search, conversions, or messaging. However, it’s important to understand that a page underperforming on certain metrics isn’t necessarily low-quality.

Low-quality pages are usually under-optimized, so SEO elements like title tags, descriptions, and headings don’t follow SEO best practices. In many cases, the page feels like filler that doesn’t contribute to the website’s main topic. Before removing any page, here are some questions to ask yourself:

- Is this page bringing in traffic?

- Is the page converting visitors into paying customers?

- Is the page bringing in organic backlinks?

- Does the page help explain my core products/services?

- Is there a better URL I can redirect this page to?

- Does the page have any special functionality on my site?

Based on the answers to these questions, you’ll be able to determine whether a page should be removed, merged, or reworked. (Note: Always remember that the main goal of this exercise is to reduce the number of pages Google has to crawl and index from your site. This process enables you to remove any unnecessary URLs and allocate those resources to indexing web pages that bring significant results.)

9. Produce Unique, High-Quality Pages

Just as low-quality content hurts your site indexability, producing high-quality content has the opposite effect. Great content tells Google you are a good and reliable source of information.

However, isn’t page quality subjective? Well, if you think about it in terms of writing style, maybe. But we’re talking about more than just the written information on the page.

For a page to be high-quality in terms of search, pay attention to:

1. Search intent

People use search engines to find answers to their questions, but different people use diverse terms and phrases to describe the same thing. For Google to offer the best content, it has to understand the core intention behind the query, and you can use the same logic to create a great piece of content.

To understand the search intent behind a keyword, you can follow these two steps:

First, put yourself in the searcher’s shoes. What information is most crucial for them, and why? For example, if someone searches for “how to make Google index my page faster,” they’re probably looking for a list of actionable strategies they can implement.

A good piece of content that fits the search intent would be a guide or listicle that helps them achieve their ultimate goal.

The second step is to search for the query in Google and see what’s already getting ranked at the top of search results. This way, you can see how Google interprets the query’s search intent, helping you decide your piece’s format.

2. On-Page SEO Elements

Elements like title tags, meta descriptions, headings, internal links, images, and schemas allow you to accurately communicate to search engines what your page is about. If it’s easier to categorize your page, then the web page indexing process will be faster.

You can follow our product page optimization guide as a baseline for your site’s optimization, even if it’s not an eCommerce site.
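
As a rough starting point, the Python sketch below checks whether a page has three of these elements: a title tag, a meta description, and an H1 heading. The `OnPageAudit` class and `missing_elements` helper are hypothetical names, and the check only looks for presence, not quality.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class OnPageAudit(HTMLParser):
    """Records whether a title, a meta description, and an H1 have content."""

    def __init__(self) -> None:
        super().__init__()
        self.found: Dict[str, bool] = {
            "title": False,
            "meta_description": False,
            "h1": False,
        }
        self._open: Optional[str] = None  # text-bearing tag we are inside

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "description":
            self.found["meta_description"] = bool((attr.get("content") or "").strip())
        elif tag in ("title", "h1"):
            self._open = tag

    def handle_data(self, data):
        if self._open and data.strip():
            self.found[self._open] = True

    def handle_endtag(self, tag):
        if tag == self._open:
            self._open = None


def missing_elements(html: str) -> List[str]:
    audit = OnPageAudit()
    audit.feed(html)
    return [name for name, present in audit.found.items() if not present]


# Hypothetical page with a title and an H1 but no meta description.
page = "<html><head><title>Fast Indexing Guide</title></head><body><h1>Indexing</h1></body></html>"
missing = missing_elements(page)
print(missing)
```

A fuller audit would also look at internal links, image alt text, and structured data, but even this basic pass can flag obviously under-optimized pages.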

3. Loading Speed

Site speed has a significant impact on user experience. Ensuring your pages and their elements load fast on any device is critical for SEO success, especially considering that Google has moved to mobile-first indexing. In other words, Google will measure your site’s quality by its mobile version first. So, even if your desktop version is doing great, you’ll be pulled down if your mobile version is underperforming.

The most challenging part of improving mobile performance is JavaScript because phones don’t have the same processing capabilities as desktops and laptops. To help you on your optimization journey, we put together seven strategies to optimize JS for mobile.

4. Mobile-Friendliness

Besides loading fast, your pages must be responsive to be considered high-quality. Their layout should adapt to the screen size of the user’s device.

Google can determine this by using its mobile crawler, which will render your page as it would appear on a mobile screen. If the text is too small, elements move out of the screen, or images pile up on top of the text, your page will score low and be deprioritized in rankings and indexation.

Having said that, there are other factors that influence your pages’ quality, but these are the four aspects with the most significant impact, so focus on these first.

10. Fix Web Page Indexing Issues Following Our Checklist

These strategies can only work if you don’t have deep indexation issues crippling your site.

To help you find and fix all indexation difficulties, we’ve built a site indexing checklist that’ll guide you from the most common to the more technically challenging indexing troubles many websites experience.

If you want to enjoy real SEO benefits and solve most indexing and JS-related SEO issues with a simple solution, use Prerender. Once you install Prerender’s middleware on your backend and submit your sitemap, Prerender will start caching all your pages and serving them to Google, index-ready.

Try it yourself and see how your indexing speed improves substantially. Sign up now and get the first 1,000 URLs for FREE!